Environment Setup

This article uses Kubernetes v1.23.0 and Istio 1.17.0.

Deploying a Simple Application

Deploying Two Versions of a Microservice

We will deploy a simple microservice written in Python with Flask to k8s. Its code is:

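```python
import os
from urllib.request import urlopen

from flask import Flask, request

app = Flask(__name__)


@app.route('/')
def i_am_fine():
    return "I am FINE!"


@app.route('/env/<env>')
def show_env(env):
    # Return the value of the named environment variable, e.g. /env/version.
    # Default to "" because a Flask view must not return None.
    return os.environ.get(env, "")


@app.route('/fetch')
def fetch_url():
    # Fetch the URL passed in the ?url= query parameter and relay its body.
    url = request.args.get('url', '')
    with urlopen(url) as response:
        return response.read()


if __name__ == "__main__":
    app.run(host="0.0.0.0", port=80, debug=True)
```

The environment variable read through the /env/ route (version in this article) is set when the Deployment is created.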

Now create the k8s resources for this service in flaskapp.istio.yaml:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: flaskapp
  labels:
    app: flaskapp
spec:
  selector:
    app: flaskapp
  ports:
  - name: http
    port: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: flaskapp-v1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: flaskapp
      version: v1
  template:
    metadata:
      labels:
        app: flaskapp
        version: v1
    spec:
      containers:
      - name: flaskapp
        image: dustise/flaskapp
        imagePullPolicy: IfNotPresent
        env:
        - name: version
          value: v1
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: flaskapp-v2
spec:
  replicas: 1
  selector:
    matchLabels:
      app: flaskapp
      version: v2
  template:
    metadata:
      labels:
        app: flaskapp
        version: v2
    spec:
      containers:
      - name: flaskapp
        image: dustise/flaskapp
        imagePullPolicy: IfNotPresent
        env:
        - name: version
          value: v2
```

Notes:

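- Both Deployments use the same image. They are distinguished by their version labels, v1 and v2.
- Both Deployments set an environment variable named version, with the values v1 and v2 respectively.
- Both Deployments carry the app and version labels. In an Istio mesh, these two labels conventionally identify an application and its version.
- The Service selector uses only the app label, so the Service forwards traffic to the Pods of both Deployments.
- The Service port is named http, following Istio's port-naming convention. The name tells Istio which protocol the port carries.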

When the service is submitted to k8s, Istio must inject its sidecar. There are two ways to do this; use either one:

Automatic injection

Label the namespace that the service runs in with istio-injection=enabled:

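```bash
kubectl label namespace default istio-injection=enabled
```

With the label in place, apply the manifest as usual with kubectl apply -f flaskapp.istio.yaml. The sidecar is then injected when the Pods are created.

Manual injection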

```bash
istioctl kube-inject -f flaskapp.istio.yaml | kubectl apply -f -
```

After a while, check the Pod status:

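```
kubectl get pods -n default
NAME                           READY   STATUS    RESTARTS   AGE
flaskapp-v1-6d5d7ffb9f-5q6v8   2/2     Running   0          20h
flaskapp-v2-8647744b6-dlnrb    2/2     Running   0          20h
```

READY shows 2/2 because each Pod now runs two containers, which is the result of Istio's sidecar injection. Apart from the init container istio-init, each Pod has two containers: istio-proxy and the original service.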
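You can also confirm injection by inspecting a Pod's JSON instead of reading the READY column. The following is a minimal sketch. It assumes the JSON printed by kubectl get pod <name> -o json, with its spec.containers and spec.initContainers lists, and uses the hypothetical script name check_injection.py. When the Istio CNI plugin is used there is no istio-init container, so has_istio_init can legitimately be False.

```python
import json
import sys


def injection_summary(pod: dict) -> dict:
    """Summarize sidecar injection for one Pod object (kubectl get pod -o json)."""
    spec = pod.get("spec", {})
    containers = [c["name"] for c in spec.get("containers", [])]
    init_containers = [c["name"] for c in spec.get("initContainers", [])]
    return {
        "containers": containers,
        # The Envoy sidecar added by injection runs as the istio-proxy container.
        "has_sidecar": "istio-proxy" in containers,
        # istio-init sets up traffic redirection; it is absent with Istio CNI.
        "has_istio_init": "istio-init" in init_containers,
    }


if __name__ == "__main__":
    # Usage (hypothetical script name):
    #   kubectl get pod flaskapp-v1-6d5d7ffb9f-5q6v8 -o json | python3 check_injection.py
    print(json.dumps(injection_summary(json.load(sys.stdin)), indent=2))
```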

Deploying the Client Microservice

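The client service is simple. It runs an image with various test tools preinstalled, and all testing is done from a shell inside this container. Prepare the resource file sleep.yaml: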

```yaml
apiVersion: v1
kind: Service
metadata:
  name: sleep
  labels:
    app: sleep
    version: v1
spec:
  selector:
    app: sleep
    version: v1
  ports:
  - name: ssh
    port: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: sleep
spec:
  replicas: 1
  selector:
    matchLabels:
      app: sleep
      version: v1
  template:
    metadata:
      labels:
        app: sleep
        version: v1
    spec:
      containers:
      - name: sleep
        image: dustise/sleep
        imagePullPolicy: IfNotPresent
```

This client does not serve anything to other services, but it still needs a Service object. This is another Istio requirement: Istio cannot discover or manage a Deployment that has no Service.

Inject and submit this file in the same way:

```bash
istioctl kube-inject -f sleep.yaml | kubectl apply -f -
```

After a while, check the Pod status:

```
kubectl get pods -l app=sleep -n default
NAME                     READY   STATUS    RESTARTS   AGE
sleep-6bf6676dcc-6nvnm   2/2     Running   0          21h
```

Verifying the Service

Next, open a shell in the sleep container and run the test. If you have kubernetes-dashboard, you can also open the shell from its UI.

```
kubectl exec -it sleep-6bf6676dcc-6nvnm -- /bin/bash
bash-5.0# for((i=0; i<100; i++)); do http --body http://flaskapp/env/version; done
v2
v1
v2
……
```

The output shows v1 and v2 appearing at random, roughly half each. This happens because the Service load-balances across the Pods of both Deployments.
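To put a number on "roughly half each", you can tally the responses. The following is a minimal sketch. It assumes a Python 3 interpreter is available wherever you run it. The contents of the dustise/sleep image are not described here, so that is an assumption. The default URL is the one from the loop above.

```python
from collections import Counter
from urllib.request import urlopen


def sample(url: str = "http://flaskapp/env/version", n: int = 100) -> list[str]:
    """Send n GET requests to url and collect the response bodies as text."""
    bodies = []
    for _ in range(n):
        with urlopen(url, timeout=5) as response:
            bodies.append(response.read().decode())
    return bodies


def tally(bodies: list[str]) -> dict[str, float]:
    """Return the share of each distinct body, e.g. {'v1': 0.5, 'v2': 0.5} for an even split."""
    counts = Counter(body.strip() for body in bodies)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {version: count / total for version, count in sorted(counts.items())}


if __name__ == "__main__":
    print(tally(sample()))
```

sample() and tally() are separate so that the counting logic can be checked without a cluster.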

Creating a Destination Rule and a Default Route

Next, use Istio to manage the traffic to these two versions.

First, create the destination rule for the flaskapp application in flaskapp-destinationrule.yaml:

```yaml
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: flaskapp
spec:
  host: flaskapp
  subsets:
  - name: v1
    labels:
      version: v1
  - name: v2
    labels:
      version: v2
```

This DestinationRule uses Pod labels to split the flaskapp service into two subsets, named v1 and v2.

Apply the file:

```bash
kubectl apply -f flaskapp-destinationrule.yaml
```

Next, create a routing rule for the flaskapp service in flaskapp-virtualservice-v2.yaml:

```yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: flaskapp-default-v2
spec:
  hosts:
  - flaskapp
  http:
  - route:
    - destination:
        host: flaskapp
        subset: v2
```

This VirtualService takes over all requests to the flaskapp host name. It sends all of that traffic to the v2 subset defined in the DestinationRule.
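The following toy model shows how the two rules combine. It is only an illustration of the idea, not Istio's implementation. The Pod names are hypothetical simplifications, since real names carry hashes. With no VirtualService, every endpoint of the Service is a candidate, which matches the 50/50 result seen earlier. With the v2 route, only endpoints whose labels match the v2 subset remain.

```python
# Toy model of VirtualService + DestinationRule subset selection (not Istio code).

# Subsets from flaskapp-destinationrule.yaml: subset name -> required Pod labels.
SUBSETS = {"v1": {"version": "v1"}, "v2": {"version": "v2"}}

# Endpoints behind the flaskapp Service with their Pod labels (hypothetical names).
ENDPOINTS = {
    "flaskapp-v1": {"app": "flaskapp", "version": "v1"},
    "flaskapp-v2": {"app": "flaskapp", "version": "v2"},
}


def candidate_endpoints(route_subset, subsets=SUBSETS, endpoints=ENDPOINTS):
    """Endpoints eligible for a request to host flaskapp.

    route_subset is the subset named in the VirtualService route, or None
    when no VirtualService applies (plain Service load balancing).
    """
    if route_subset is None:
        return sorted(endpoints)
    wanted = subsets[route_subset]
    return sorted(
        name for name, labels in endpoints.items()
        if all(labels.get(key) == value for key, value in wanted.items())
    )


if __name__ == "__main__":
    print(candidate_endpoints(None))  # before the VirtualService: both versions
    print(candidate_endpoints("v2"))  # flaskapp-default-v2 routes only to subset v2
```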

Apply the file:

```bash
kubectl apply -f flaskapp-virtualservice-v2.yaml
```

Now test again from the sleep container to check whether the new traffic rule has taken effect:

```
# kubectl exec -it sleep-6bf6676dcc-6nvnm -- /bin/bash
bash-5.0# for((i=0; i<100; i++)); do http --body http://flaskapp/env/version; done
v2
v2
v2
……
```

The routing rule has taken effect: however many times the request is repeated, the response is always v2.

Istio Dashboard

As a cloud-native service mesh, Istio has to provide observability features. The istioctl dashboard command lists the dashboards it can open:

```
# istioctl dashboard
Access to Istio web UIs

Usage:
  istioctl dashboard [flags]
  istioctl dashboard [command]

Aliases:
  dashboard, dash, d

Available Commands:
  controlz    Open ControlZ web UI
  envoy       Open Envoy admin web UI
  grafana     Open Grafana web UI
  jaeger      Open Jaeger web UI
  kiali       Open Kiali web UI
  prometheus  Open Prometheus web UI
  skywalking  Open SkyWalking UI
  zipkin      Open Zipkin web UI
```

Recent Istio releases no longer install these dashboards by default. You have to deploy them yourself.

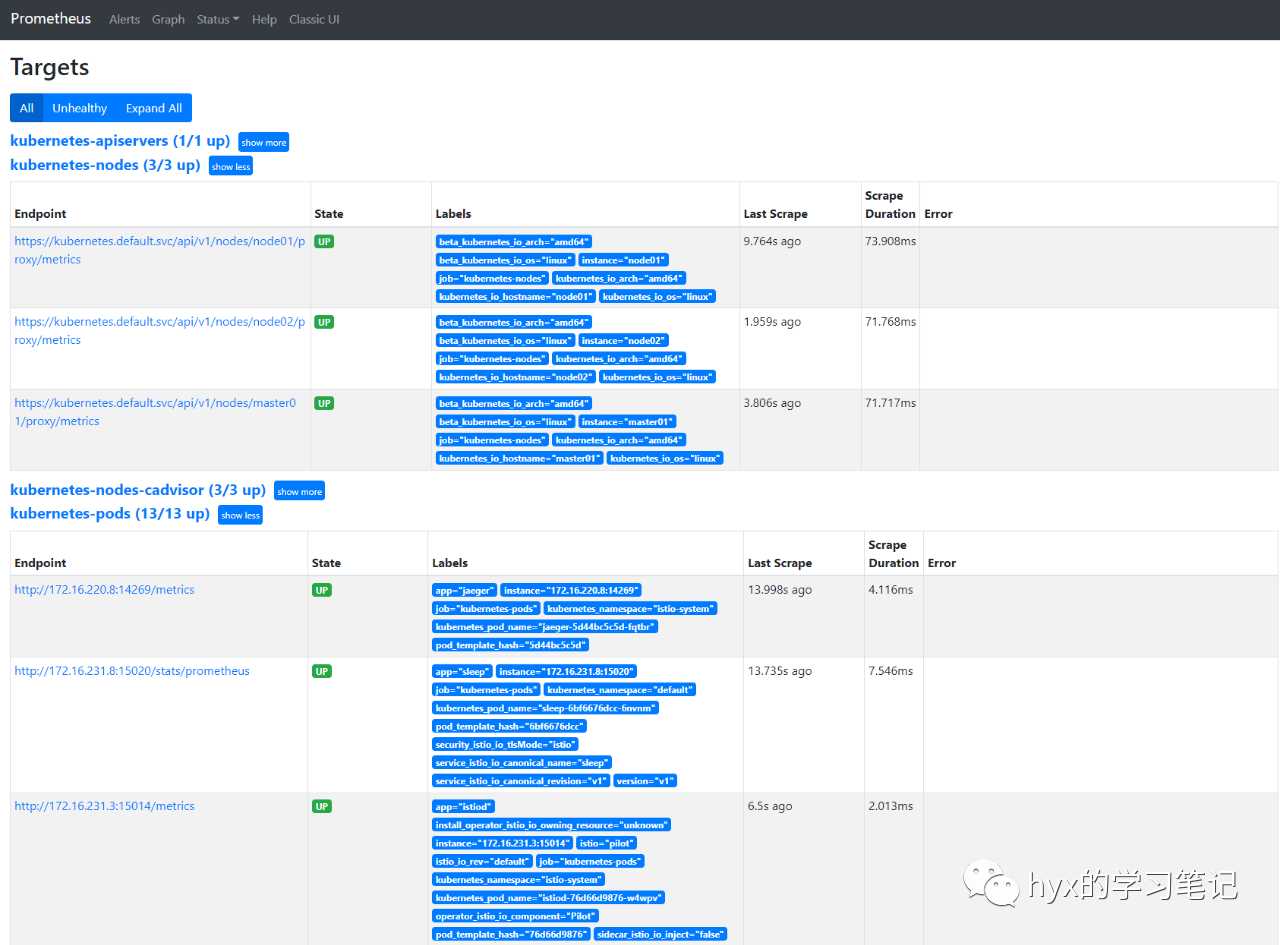

Deploying Prometheus

Apply the YAML to Kubernetes manually:

```
# cd /opt/istio-1.12.1/samples/addons
# kubectl apply -f prometheus.yaml
# kubectl get pods -l app=prometheus -n istio-system
NAME                          READY   STATUS    RESTARTS   AGE
prometheus-64fd8ccd65-6n6qt   2/2     Running   0          17h
```

Start the Prometheus dashboard listener:

```
# istioctl dashboard prometheus --address=192.168.91.10
http://192.168.91.10:9090
```

On the Prometheus Targets page, the istiod target on port 15014 fails with connection refused. To fix this, edit the istio-operator Deployment:

```bash
kubectl edit deploy istio-operator -n istio-operator
```

Find --monitoring-host=127.0.0.1 and change it to --monitoring-host=0.0.0.0.
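A plain TCP probe can check whether the monitoring port accepts connections, before and after the change. The following is a minimal sketch. The host argument is a placeholder for the istiod Pod IP, which this article does not give. The probe has to run somewhere that can reach the Pod network. probe.py is a hypothetical script name.

```python
import socket
import sys


def port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers "connection refused", timeouts and unreachable hosts.
        return False


if __name__ == "__main__":
    # Usage (hypothetical): python3 probe.py <istiod-pod-ip> 15014
    host, port = sys.argv[1], int(sys.argv[2])
    print(f"{host}:{port} open={port_open(host, port)}")
```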

Then open the Prometheus Targets page again:

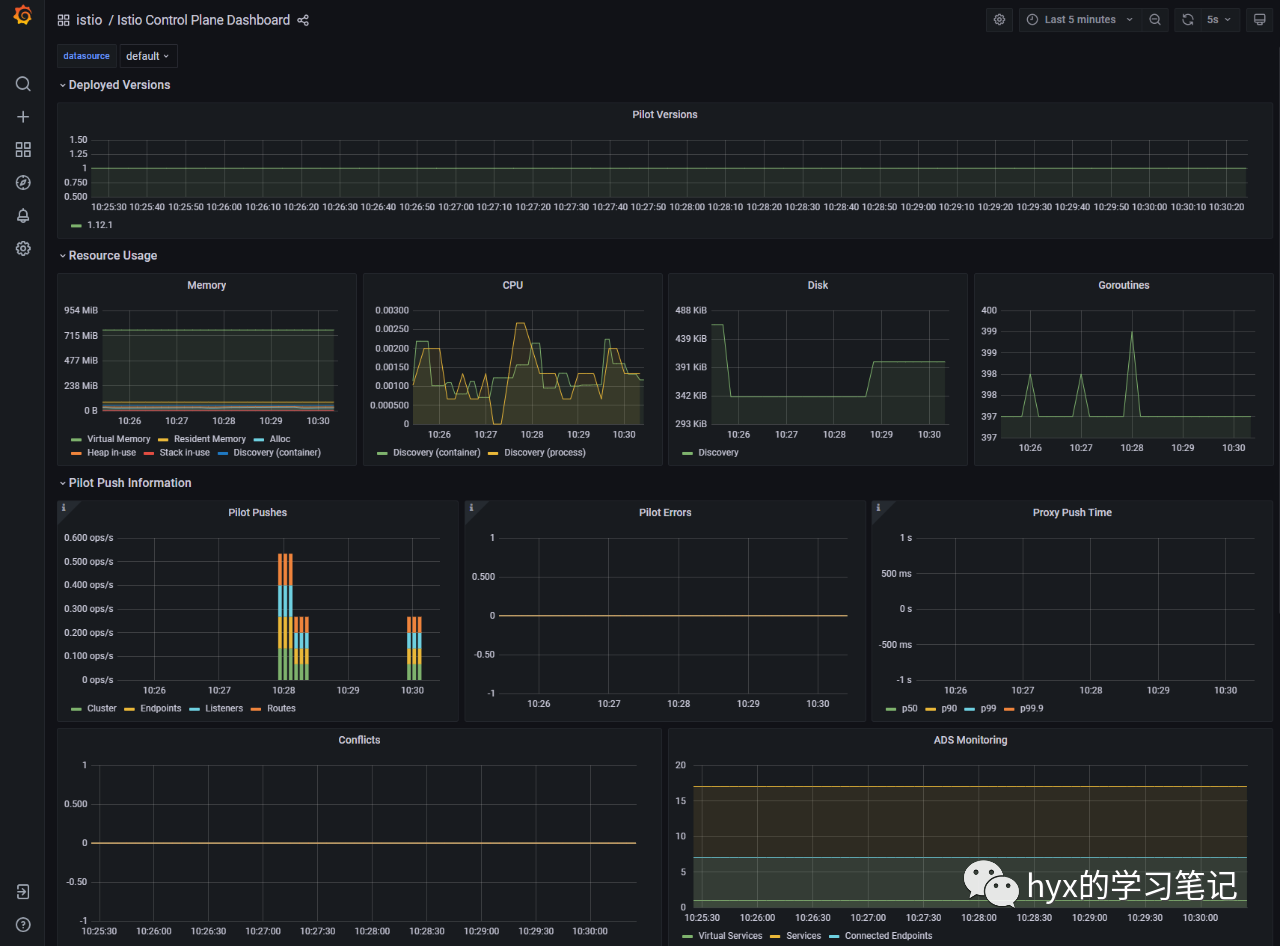

Deploying Grafana

Apply the YAML to Kubernetes manually:

```
# cd /opt/istio-1.12.1/samples/addons
# kubectl apply -f grafana.yaml
# kubectl get pods -l app=grafana -n istio-system
NAME                       READY   STATUS    RESTARTS   AGE
grafana-6ccd56f4b6-vz7k5   1/1     Running   0          16h
```

Start the Grafana dashboard listener:

```
# istioctl dashboard grafana --address=192.168.91.10
http://192.168.91.10:3000
```

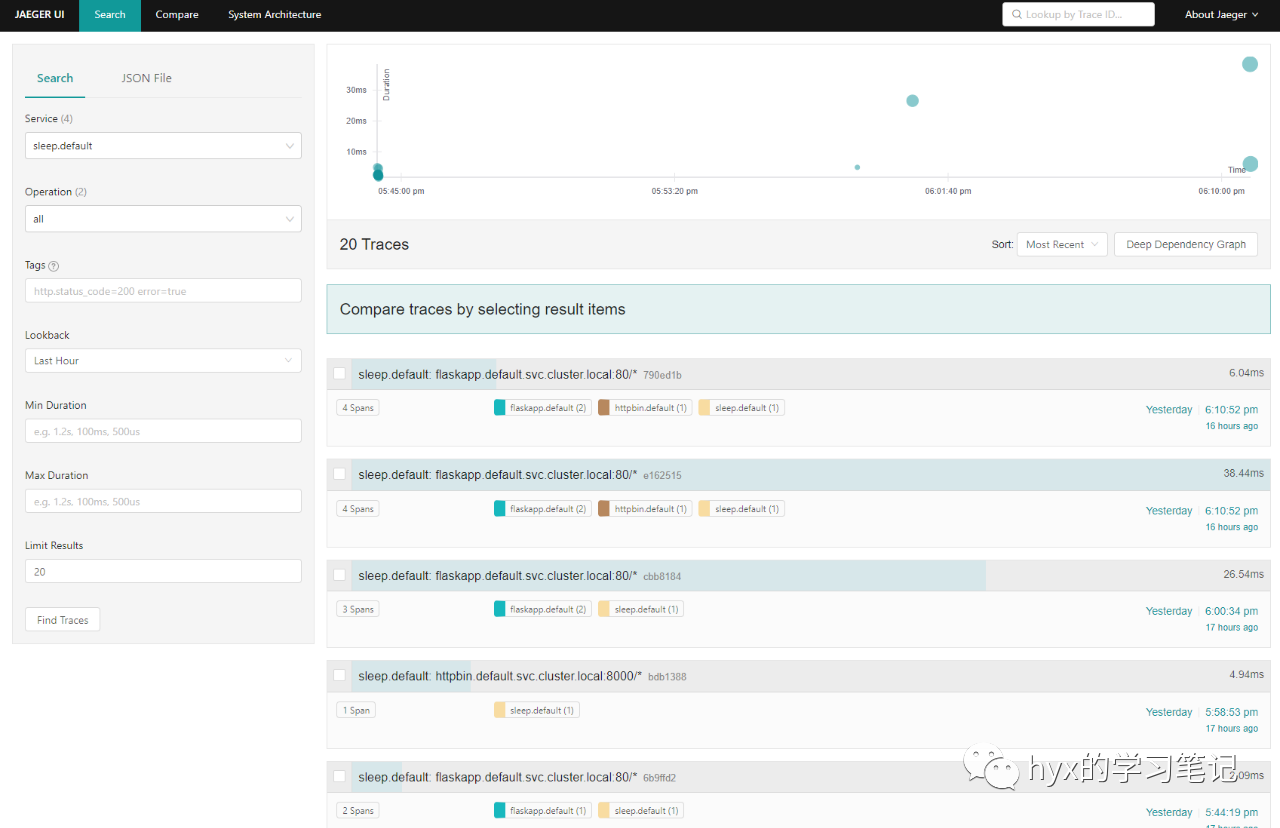

Deploying Jaeger

Apply the YAML to Kubernetes manually:

```
# cd /opt/istio-1.12.1/samples/addons
# kubectl apply -f jaeger.yaml
# kubectl get pods -l app=jaeger -n istio-system
NAME                      READY   STATUS    RESTARTS   AGE
jaeger-5d44bc5c5d-fqtbr   1/1     Running   0          16h
```

Start the Jaeger dashboard listener:

```
# istioctl dashboard jaeger --address=192.168.91.10
http://192.168.91.10:16686
```

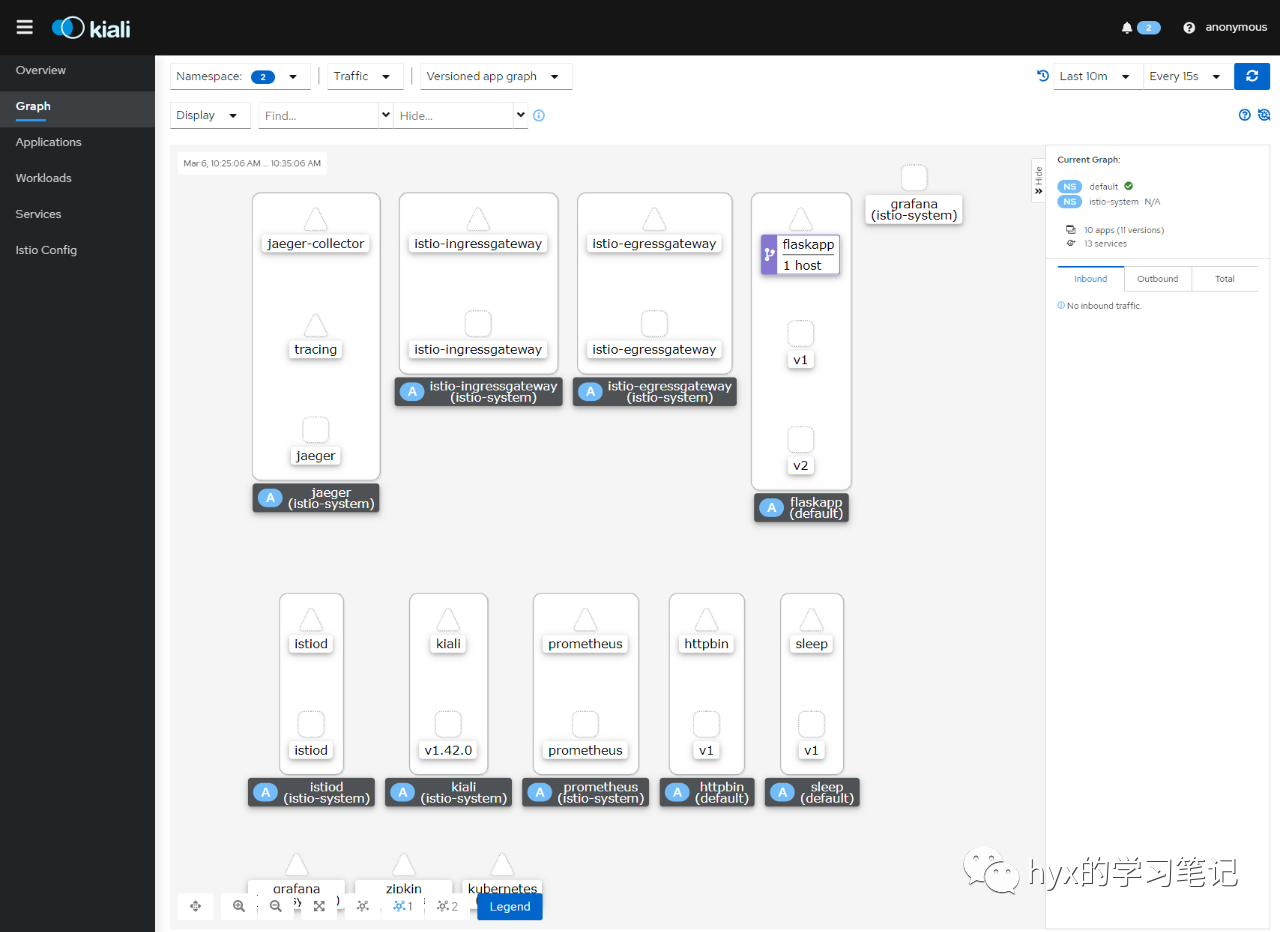

Deploying Kiali

Apply the YAML to Kubernetes manually:

```
# cd /opt/istio-1.12.1/samples/addons
# kubectl apply -f kiali.yaml
# kubectl get pods -l app=kiali -n istio-system
NAME                     READY   STATUS    RESTARTS   AGE
kiali-79b86ff5bc-skwp8   1/1     Running   0          16h
```

Start the Kiali dashboard listener:

```
# istioctl dashboard kiali --address=192.168.91.10
http://192.168.91.10:20001/kiali
```

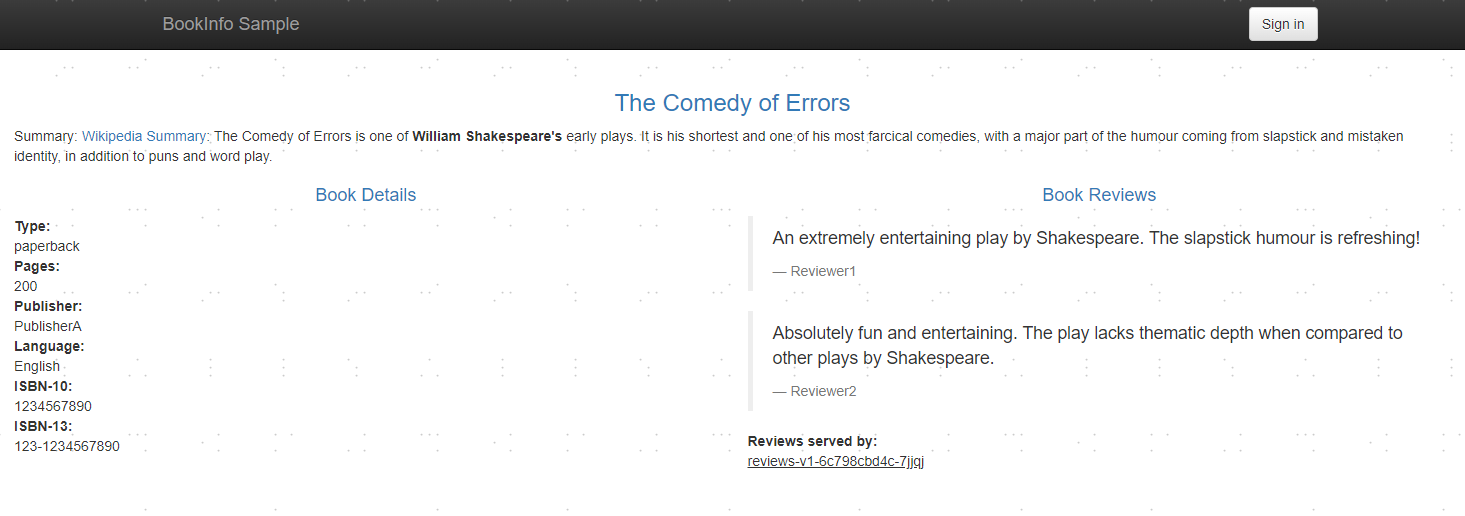

Deploying the Bookinfo Sample Application

- From the istio-1.17.0 directory, deploy the application in samples/bookinfo/:

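```
# cd istio-1.17.0/
# kubectl apply -f samples/bookinfo/platform/kube/bookinfo.yaml
```

Wait until all Pods are Running. If automatic injection is enabled for the namespace, the Istio sidecar is deployed alongside each application Pod as it becomes ready.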

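- Once the steps above have succeeded, run the following command. It checks the title of the returned page to verify that the application is running in the cluster and serving pages: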

```
# kubectl exec "$(kubectl get pod -l app=ratings -o jsonpath='{.items[0].metadata.name}')" -c ratings -- curl -sS productpage:9080/productpage | grep -o "<title>.*</title>"
<title>Simple Bookstore App</title>
```

- Expose the application to outside traffic

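At this point the Bookinfo application is deployed, but it cannot yet be reached from outside the cluster. To open access, create an Istio Ingress Gateway, which maps a path to a route at the edge of the mesh: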

```
# kubectl apply -f samples/bookinfo/networking/bookinfo-gateway.yaml
gateway.networking.istio.io/bookinfo-gateway created
virtualservice.networking.istio.io/bookinfo created
```

Use the following command to make sure the configuration has no problems:

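```
# istioctl analyze
✔ No validation issues found when analyzing namespace: default.
```

- Determine the ingress IP and port. The Service list shows that istio-ingressgateway is of type LoadBalancer by default. Our cluster has no load balancer, so its EXTERNAL-IP stays <pending> and the Service must be changed to NodePort: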

```
# kubectl get svc -n istio-system
NAME                   TYPE           CLUSTER-IP       EXTERNAL-IP   PORT(S)                                                                      AGE
grafana                ClusterIP      10.97.23.53      <none>        3000/TCP                                                                     90m
istio-egressgateway    ClusterIP      10.99.30.204     <none>        80/TCP,443/TCP                                                               44d
istio-ingressgateway   LoadBalancer   10.111.102.172   <pending>     15021:31849/TCP,80:30464/TCP,443:31104/TCP,31400:30828/TCP,15443:30440/TCP   44d
istiod                 NodePort       10.108.227.110   <none>        15010:32579/TCP,15012:32125/TCP,443:30219/TCP,15014:32647/TCP                44d
jaeger-collector       ClusterIP      10.105.82.176    <none>        14268/TCP,14250/TCP,9411/TCP                                                 87m
kiali                  ClusterIP      10.106.90.206    <none>        20001/TCP,9090/TCP                                                           85m
prometheus             ClusterIP      10.97.146.99     <none>        9090/TCP                                                                     4h16m
tracing                ClusterIP      10.100.227.136   <none>        80/TCP,16685/TCP                                                             87m
zipkin                 ClusterIP      10.110.162.221   <none>        9411/TCP                                                                     87m
```

Edit the istio-ingressgateway Service and change type: LoadBalancer to type: NodePort:

```
# kubectl edit svc istio-ingressgateway -n istio-system
```

After the change:

```
NAME                   TYPE           CLUSTER-IP       EXTERNAL-IP   PORT(S)                                                                      AGE
grafana                ClusterIP      10.97.23.53      <none>        3000/TCP                                                                     165m
istio-ingressgateway   NodePort       10.111.102.172   <none>        15021:31849/TCP,80:30464/TCP,443:31104/TCP,31400:30828/TCP,15443:30440/TCP   44d
```

Look up the NodePort of the port named http2 in the istio-ingressgateway Service:

```
# kubectl get svc istio-ingressgateway -n istio-system -o jsonpath="{.spec.ports[?(@.name=='http2')].nodePort}";echo
30464
```

The gateway is therefore reachable at http://192.168.126.100:30464, which is a cluster node's IP plus this NodePort.

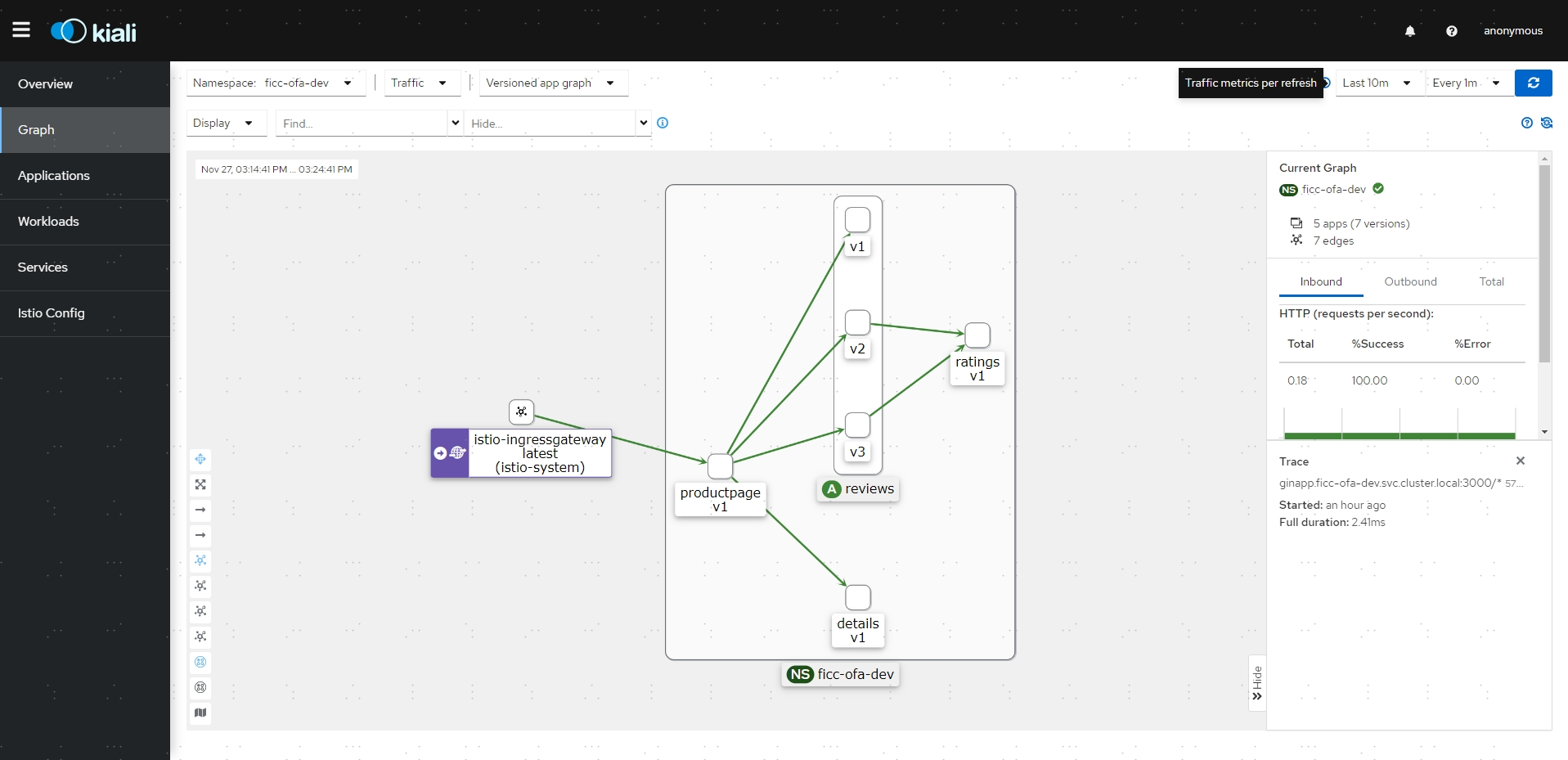
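If you script this step, you can mirror the jsonpath lookup in Python and build the gateway URL at the same time. The following is a minimal sketch. It assumes the JSON printed by kubectl get svc istio-ingressgateway -n istio-system -o json. You supply the node IP yourself; 192.168.126.100 is only this article's value. gateway_url.py is a hypothetical script name.

```python
import json
import sys
from typing import Optional


def node_port(service: dict, port_name: str = "http2") -> Optional[int]:
    """Return the nodePort of the Service port named port_name, or None."""
    for port in service.get("spec", {}).get("ports", []):
        if port.get("name") == port_name:
            return port.get("nodePort")
    return None


def gateway_url(node_ip: str, service: dict, port_name: str = "http2") -> str:
    """Build http://<node_ip>:<nodePort> for the given Service port."""
    port = node_port(service, port_name)
    if port is None:
        raise ValueError(f"no port named {port_name!r} with a nodePort")
    return f"http://{node_ip}:{port}"


if __name__ == "__main__":
    # Usage (hypothetical script name):
    #   kubectl get svc istio-ingressgateway -n istio-system -o json \
    #     | python3 gateway_url.py 192.168.126.100
    print(gateway_url(sys.argv[1], json.load(sys.stdin)))
```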

- From outside the k8s cluster, access the Bookinfo application 100 times:

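```
# for i in $(seq 1 100); do curl -s -o /dev/null "http://192.168.126.100:30464/productpage"; done
```

- Open Kiali to see the call graph for these requests.

- Open http://192.168.126.100:30464/productpage in a browser to see the Bookinfo front-end page.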
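You can also write the curl loop above in Python, which makes it easy to count the response codes. The following is a minimal sketch. PRODUCTPAGE is this article's gateway address and should be replaced with yours. The opener parameter exists only so that the function can be exercised without a cluster. Connection errors, as opposed to HTTP error codes, are not caught and will stop the loop.

```python
from collections import Counter
from urllib.error import HTTPError
from urllib.request import urlopen

PRODUCTPAGE = "http://192.168.126.100:30464/productpage"  # this article's gateway; adjust


def generate_load(url: str = PRODUCTPAGE, count: int = 100, opener=urlopen) -> Counter:
    """Request url count times and return a Counter of HTTP status codes."""
    statuses = Counter()
    for _ in range(count):
        try:
            with opener(url, timeout=5) as response:
                response.read()  # drain the body, like curl -o /dev/null
                statuses[response.status] += 1
        except HTTPError as err:
            # urlopen raises for 4xx/5xx; record the code instead of stopping.
            statuses[err.code] += 1
    return statuses


if __name__ == "__main__":
    print(dict(generate_load()))
```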